/ Third stone from the sun proposes a 501-page idea about (changing) form, color and material, with ideas arising out of ideas.

/ Third stone from the sun

2024

For Rob Hameka (Deventer).

Album Cover Direction

2021

Generative works portraying the last flights with radio-controlled objects, as a narrative for telling a story about Pa (Dad).

Untitled (Pa)

2023

Art direction and character development for Quest 1607 (upcoming board game), generated from curated datasets and algorithms. Source images are from MET-open and Rijksstudio.

Quest 1607 Development

2020

How the policies of generative AI can be bypassed to produce content the models are not supposed to produce, according to the companies behind the algorithms and their stated guidelines.

Policy intimacy

2023

ESP32 installation setup combining xy-data from a certain point in time with film narrative into a new moving translation.

We're not really strangers

2024

Part of 'Its always the binary (Red)'. Two red flags were featured during the exhibition.

Red R(255), G(0), B(0)

2021

Definitive 501-page idea (book) about (changing) form, color and material.

/ Third stone from the sun

2024

Short film with generated voice data. Our world, our bodies, our very essence, are all cloaked in the shroud of our own beliefs. We're left with the ability to define and predict. We don't know a single thing.

We know Nothing

2024

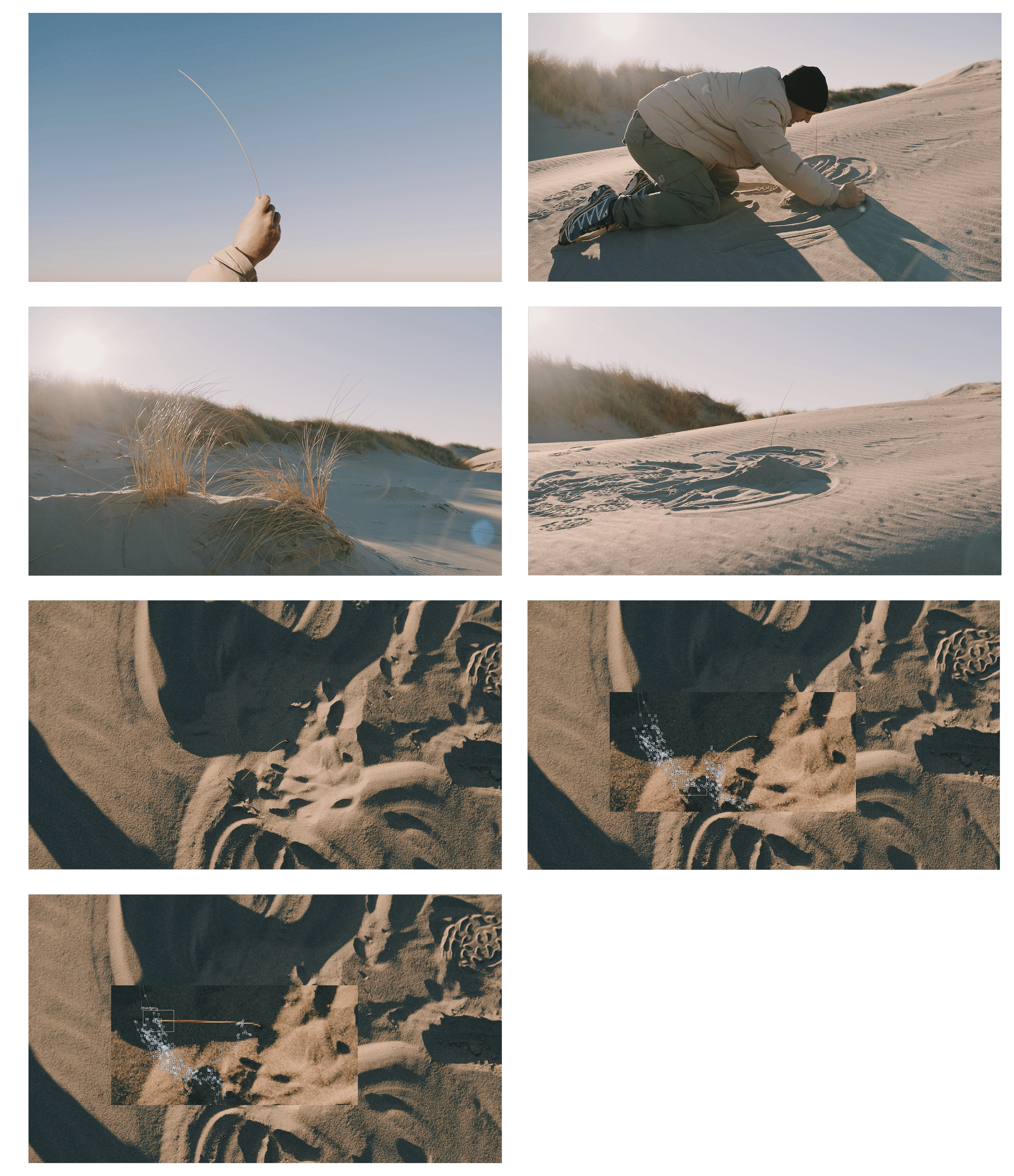

Part of 'We're not really strangers'.

Dune seed shortfilm

2024

A series of lightweight silkscreen prints featuring black letters on a completely black background. The visibility of the prints is highly influenced by the available light, due to the layered use of identical pigments.

Tabula Rasa

2021

Drones performing choreographed movements with letters as indicators of language and sound.

Somehow everywhere

2025

Questioning binary code and the copyright of color. By altering the final bits in the binary data behind a color, computers can generate entirely different binary strings that produce the exact same visual output, including an identical R(255), G(0), B(0) result.

Its always the binary (Red)

2021
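
One way to read the idea behind 'Its always the binary (Red)' is sketched below. It is not the method used for the work, only an illustration under stated assumptions: color is stored as 16-bit samples per channel, and the display reduces each sample to 8 bits by dropping the low byte (truncation, not rounding). The names `to_8bit`, `displayed_rgb` and `as_bits` are hypothetical helpers.

```python
def to_8bit(sample16: int) -> int:
    """Reduce a 16-bit channel sample to 8 bits by dropping the low byte.

    Assumption: the display truncates; a rounding conversion could behave differently.
    """
    return sample16 >> 8


def displayed_rgb(pixel16):
    """Return the 8-bit (R, G, B) triple shown for a 16-bit-per-channel pixel."""
    return tuple(to_8bit(channel) for channel in pixel16)


def as_bits(pixel16):
    """Write the stored pixel as one 48-character binary string."""
    return "".join(f"{channel:016b}" for channel in pixel16)


# Two stored reds: the second has the final bits of each channel altered.
red_a = (0xFFFF, 0x0000, 0x0000)
red_b = (0xFF00, 0x00FF, 0x0001)

bits_a = as_bits(red_a)
bits_b = as_bits(red_b)

print(bits_a)
print(bits_b)
print(displayed_rgb(red_a), displayed_rgb(red_b))
```

Under these assumptions the two binary strings differ, while both pixels are shown as R(255), G(0), B(0).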